What is the difference between CDNs and caching?

CDNs are geographically distributed networks of proxy servers whose objective is to serve content to users more quickly. Caching is the process of storing information for a set period of time on a computer. The main difference between CDNs and caching is that while CDNs perform caching, not everything that performs caching is a CDN.

If you prefer to learn through videos, check out our YouTube channel.

What is caching?

One of the most impactful things publishers can do to improve their website’s speed is caching. Caching means that content is stored somewhere easily accessible, so a request doesn’t have to make an external call back to the origin. This reduces the time it takes a visitor to access data on a website.

Web caching is the process of storing frequently accessed data or web content (such as images, videos, HTML pages, and other resources) on a local server or client-side device to reduce the time it takes to retrieve the content when it is requested again (like using a bookmark to quickly find the page you were reading). Caching helps to improve the speed and efficiency of web browsing, as it eliminates the need to retrieve the same data repeatedly from the original source.

Web caching works by storing a copy of the requested content in a cache. When a user requests the same content again, the cache can provide the content quickly without the need to retrieve it from the original source. This can reduce the amount of data that needs to be transferred, and the time it takes to load web pages, resulting in faster and more efficient browsing experiences for users.
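To make the idea concrete, here is a minimal sketch of a cache sitting in front of an origin. The names SimpleCache and fake_origin are made up for illustration, and fake_origin simply stands in for a real request to your server.

```python
from typing import Callable, Dict


class SimpleCache:
    """Keeps a copy of each response so repeat requests skip the origin."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]) -> None:
        self._fetch_from_origin = fetch_from_origin
        self._store: Dict[str, bytes] = {}
        self.origin_calls = 0  # counts how often we had to go to the origin

    def get(self, url: str) -> bytes:
        if url in self._store:
            # Cache hit: serve the stored copy without contacting the origin.
            return self._store[url]
        # Cache miss: fetch once and keep a copy for next time.
        self.origin_calls += 1
        body = self._fetch_from_origin(url)
        self._store[url] = body
        return body


def fake_origin(url: str) -> bytes:
    # Hypothetical stand-in for a real HTTP request to the origin server.
    return f"content of {url}".encode()


cache = SimpleCache(fake_origin)
cache.get("/index.html")  # first request goes to the origin
cache.get("/index.html")  # second request is answered from the cache
```

After both calls, cache.origin_calls is still 1, which is the whole point: the second visitor never touches the origin.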

Web caching can be implemented at different levels of the web architecture, including the client-side, server-side, and intermediary caching (such as a proxy server or content delivery network). There are also different caching techniques, such as time-based caching, where content is stored in the cache for a specified period, or conditional caching, where content is stored only if certain conditions are met.
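Time-based caching can be sketched in a few lines. This example is only an illustration of the time-based technique: the class name TTLCache and the injectable clock are assumptions made so the behavior is easy to test, not part of any particular caching product.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Stores each entry for a fixed number of seconds, then forgets it."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so tests can control time
        self._store: Dict[str, Tuple[float, bytes]] = {}  # key -> (expiry, value)

    def put(self, key: str, value: bytes) -> None:
        self._store[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[bytes]:
        entry = self._store.get(key)
        if entry is None:
            return None  # never cached
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._store[key]  # expired: drop it so the caller re-fetches
            return None
        return value
```

A caller that gets None back would fetch the content from the original source and call put again.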

Overall, web caching is an essential tool for optimizing web performance and improving user experience, as it helps to reduce latency, bandwidth usage, and server load while providing faster and more reliable access to web content.

Since Google has emphasized site speed and its effect on SEO in recent years, there’s been an explosion of caching plugins, widgets, and services that all promise to increase the speed of your website. What publishers don’t realize is that sometimes these types of caching plugins have the opposite effect on your site. Knowing the similarities and differences between the types of caching can be helpful to avoid bogging down your site.

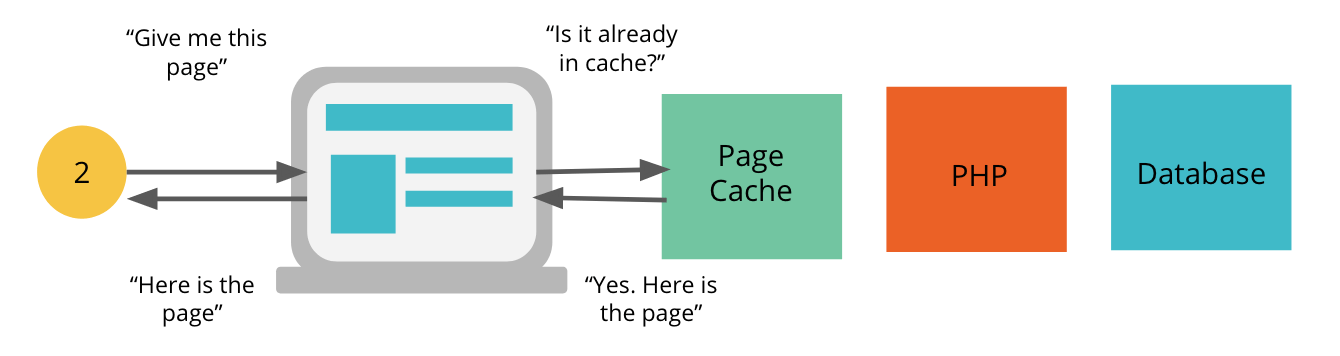

What is page caching (site caching)?

Page caching (also known as HTTP or site caching) stores data like images, web pages, and other content temporarily when it’s loaded for the first time. This data is typically held in otherwise unused memory or on disk, so it has no significant impact on the resources available to the rest of the system.

When a visitor returns to the site, the content can load quickly. It works the same way children memorize multiplication tables: once 4 x 4 is memorized, the answer can be recalled almost instantly.

Page caching is limited in the way it performs caching. It can only communicate how long to store the saved data. Publishers can set caching rules that make sure visitors see fresh content. This way, pages that haven’t been changed will still be served from the cache. If images or other content have been updated, they will be refreshed and then cached for later visits.

Misconfigured rules happen a lot with WordPress. Publishers install one of the many caching plugins available (WP Rocket, W3 Total Cache, etc.) and they now have caching. If the rules aren’t set correctly, you may have given yourself a slower site. Or, you’ve created a situation where your visitors aren’t seeing the most recent version of your site.

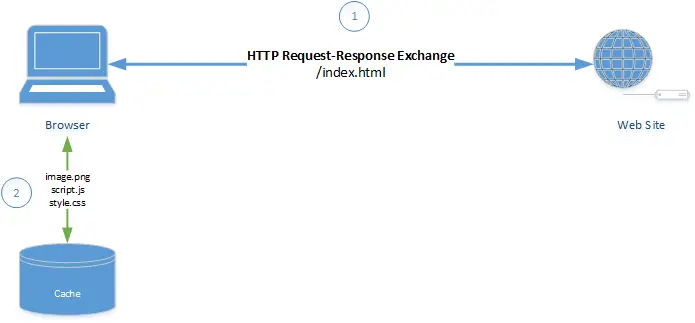

How does browser caching work?

Browser caching is a type of web caching that occurs on the client-side, where web content is stored in a user’s browser cache to reduce the amount of data that needs to be retrieved from the server when the same content is requested again. Here is a step-by-step explanation of how browser caching works:

- When a user visits a website, the browser sends a request to the server for the web page and its associated resources (such as images, CSS files, and JavaScript files).

- The server responds by sending back the requested content along with instructions on how long the content should be stored in the user’s browser cache (via HTTP headers such as “Cache-Control” and “Expires”).

- The browser stores a copy of the content in its cache, associating it with the URL of the page or resource.

- If the user visits the same page or requests the same resource again, the browser checks its cache to see if it has a copy of the content associated with that URL.

- If the content is present in the cache and the time specified in the HTTP headers has not yet expired, the browser uses the cached copy instead of requesting the content again from the server.

- If the content is not present in the cache, or the cached copy has expired, the browser sends a request to the server for the content, and the process starts over.
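The steps above can be sketched as a small simulation. This is not how any real browser is implemented; BrowserCacheSketch and the send_request callback are hypothetical names, and the sketch only understands the “max-age” directive of Cache-Control.

```python
import re
from typing import Callable, Dict, Optional, Tuple

Response = Tuple[Dict[str, str], bytes]  # (headers, body)


def parse_max_age(cache_control: str) -> Optional[int]:
    """Return the max-age value in seconds, or None if it is absent."""
    match = re.search(r"max-age=(\d+)", cache_control, re.IGNORECASE)
    return int(match.group(1)) if match else None


class BrowserCacheSketch:
    def __init__(self, send_request: Callable[[str], Response]) -> None:
        self._send_request = send_request
        self._entries: Dict[str, Tuple[float, bytes]] = {}  # url -> (expiry, body)

    def load(self, url: str, now: float) -> Tuple[bytes, str]:
        entry = self._entries.get(url)
        if entry is not None and now < entry[0]:
            # Cached copy exists and has not expired: no request is sent.
            return entry[1], "from cache"
        # Missing or expired: ask the server and start the process over.
        headers, body = self._send_request(url)
        max_age = parse_max_age(headers.get("Cache-Control", ""))
        if max_age:
            self._entries[url] = (now + max_age, body)
        return body, "from server"
```

Passing the current time in as now keeps the example deterministic; a real cache would read the clock itself.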

Browser caching can significantly improve website performance by reducing the number of requests made to the server and the time it takes to load web pages. However, it’s important to ensure that the caching settings are configured correctly to avoid serving stale content to users and to prevent caching sensitive data that should not be stored in the cache.

Browser caching makes the web much faster for the sites we visit regularly. Instead of requesting and downloading the data needed to display the web page on every visit, the browser reads it from a copy stored on your computer. Browser caching is also a type of page caching.

This works when somebody has visited your website before in the same browser. The rule you can set is, “if the content hasn’t changed, show the visitor the same version of the site they saw before.” The page is then served from the cache and loads almost instantly.

Browser caches store groups of files and content for later use. These types of files include:

- HTML pages and CSS stylesheets

- JavaScript

- Images/Multimedia

Users can set or change the caching settings within their browser. All major browsers, including Chrome and Firefox, use browser caching. Websites have the ability to communicate with a user’s browser. When pages are updated, the browser knows to replace the old content with the new content and save it in its place.

What are caching rules and how do you set them?

Caching rules are instructions that dictate how web content should be cached and when it should expire. These rules are set in the HTTP headers of the server response that is sent to the client’s browser when web content is requested. Caching rules are important because they help to optimize web performance by specifying when content should be retrieved from the cache instead of requesting it from the server.

There are several caching rules that can be set in the HTTP headers:

- Cache-Control: This header specifies the caching rules and directives for a particular resource. It can specify how long the content should be cached, whether it can be cached at all, and whether it can be stored in a shared cache or a private cache.

- Expires: This header specifies the date and time at which the cached copy of the resource will expire and should be refreshed from the server.

- ETag: This header provides a unique identifier for the resource, which can be used to check if the cached copy is still valid or if a new copy needs to be retrieved from the server.

- Last-Modified: This header provides the date and time when the resource was last modified, which can be used to check if the cached copy is still valid or if a new copy needs to be retrieved from the server.

To set caching rules, you need to modify the HTTP headers of the server response. The specific method for setting caching rules depends on the server platform being used. In general, you can set caching rules using configuration files or programmatically in server-side code.

For example, in Apache HTTP Server, you can use the “mod_expires” module to set caching rules in the .htaccess file or in the server configuration file. In Microsoft IIS, you can set caching rules in the web.config file or using the IIS Manager. In server-side code, you can set caching rules using the appropriate HTTP headers in the server response.
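If you set the headers in server-side code, the idea looks roughly like this. It is a sketch under assumptions: build_cache_headers is a hypothetical helper, and deriving the ETag from a hash of the body is just one common choice, not a requirement.

```python
import hashlib
from email.utils import formatdate
from typing import Dict


def build_cache_headers(body: bytes, last_modified_ts: float,
                        max_age: int, now_ts: float) -> Dict[str, str]:
    """Build the four caching headers for one response.

    Timestamps are seconds since the Unix epoch.
    """
    return {
        # How long (in seconds) any cache may reuse the response.
        "Cache-Control": f"public, max-age={max_age}",
        # Absolute expiry date for older clients, in HTTP date format.
        "Expires": formatdate(now_ts + max_age, usegmt=True),
        # Assumption: a short hash of the body serves as the identifier.
        "ETag": '"' + hashlib.sha256(body).hexdigest()[:16] + '"',
        # When the resource last changed, in HTTP date format.
        "Last-Modified": formatdate(last_modified_ts, usegmt=True),
    }
```

You would merge the returned dictionary into whatever response object your framework uses.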

It’s important to set caching rules carefully to ensure that the cached content is not stale or inaccurate and that sensitive data is not cached. You should also regularly monitor and adjust the caching rules to ensure that they are optimized for your website’s specific requirements.

Caching rules let publishers set parameters for how long elements of their site are cached. If a visitor came to your home page earlier today, it doesn’t make sense for a repeat request to call the server for the same content. If the content hasn’t changed, delivering a cached version of your site allows it to load almost instantly. You want good browser caching rules for your users.

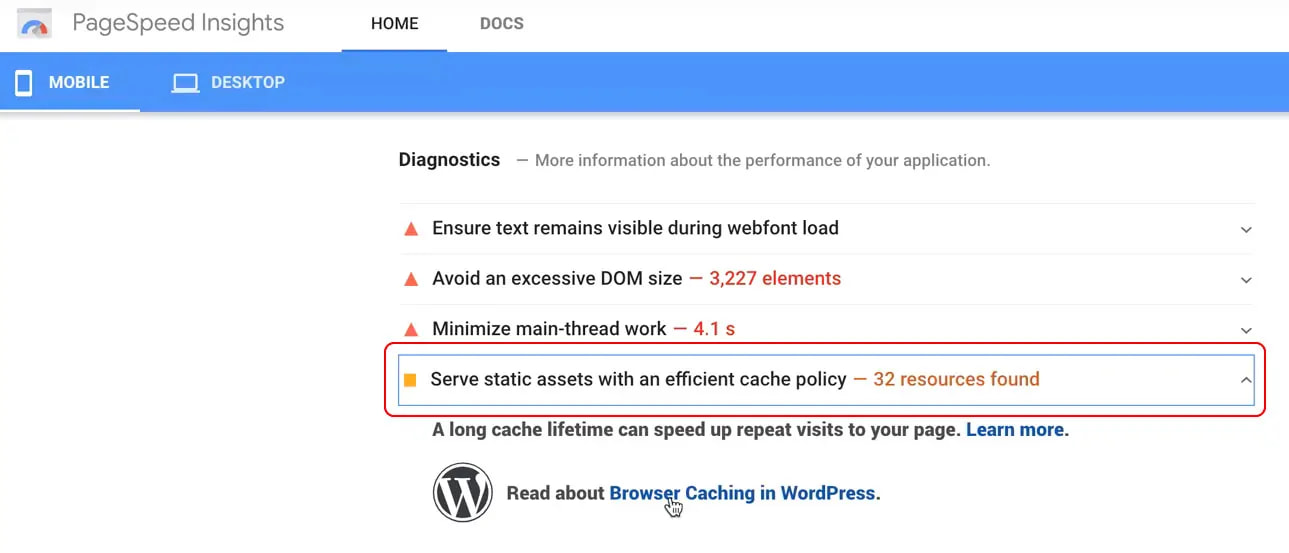

Most publishers are familiar with caching rules because of recommendations in Google Lighthouse performance audits.

Recommendations from Lighthouse to serve static assets with an efficient cache policy can help improve site speed and user experiences. Some publishers accomplish this with caching plugins. Others set the “max-age” directive of the Cache-Control header by hand to tell the browser how long it should cache the resource (in seconds).

A “max-age” of 31557600 is one year to your browser: 60 seconds * 60 minutes * 24 hours * 365.25 days = 31557600 seconds.
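As a quick check of that arithmetic, here is the same calculation in code, along with the header line it would produce. The variable names are only for illustration.

```python
# 60 seconds * 60 minutes * 24 hours * 365.25 days
SECONDS_PER_YEAR = int(60 * 60 * 24 * 365.25)

# The header a server would send to cache a resource for one year.
header = f"Cache-Control: max-age={SECONDS_PER_YEAR}"
print(header)  # prints "Cache-Control: max-age=31557600"
```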

You can also use the Cache-Control: no-cache directive if the resource changes and freshness matters, but you still want some of the benefits of caching. The browser will still cache a resource that’s set to no-cache, but it checks with the server before each reuse to confirm the cached copy is still current. If it is, the server answers with a short “304 Not Modified” response and the browser uses its cached copy instead of downloading the resource again.
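Here is a rough sketch of what the server side of that check can look like when the ETag header is used as the version marker. The function names are hypothetical, and the browser is assumed to send back the ETag it stored in an If-None-Match request header.

```python
import hashlib
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    # Assumption: a short content hash is used as the version identifier.
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def handle_request(current_body: bytes,
                   if_none_match: Optional[str]) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, body) for a resource served with no-cache."""
    etag = make_etag(current_body)
    headers = {"Cache-Control": "no-cache", "ETag": etag}
    if if_none_match == etag:
        # The browser's copy is current: send an empty 304 Not Modified.
        return 304, headers, b""
    # First visit, or the resource changed: send the full body.
    return 200, headers, current_body
```

The saving comes from the 304 branch: the round trip still happens, but the body is not sent again.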

For example: if you run a popular forum site and users are always adding new content, a good cache policy would have rules that refresh the cache often, maybe even more often than every 30 minutes.

The CSS stylesheets of your site are good examples of elements that can have a “max-age” of up to a year.
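One way to express a policy like this is a small function that picks a cache lifetime from the request path. Everything specific here is an assumption for illustration: the function name, the list of long-lived file extensions, and the ten-minute lifetime for everything else.

```python
from pathlib import PurePosixPath

ONE_YEAR = 31557600          # seconds, as calculated earlier
FREQUENT_REFRESH = 10 * 60   # assumption: ten minutes for fast-changing pages

# Assumption: these file types rarely change once published.
LONG_LIVED_EXTENSIONS = {".css", ".js"}


def cache_control_for(path: str) -> str:
    """Pick a Cache-Control value based on the kind of resource requested."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in LONG_LIVED_EXTENSIONS:
        return f"public, max-age={ONE_YEAR}"
    return f"public, max-age={FREQUENT_REFRESH}"
```

With these assumptions, cache_control_for("/static/site.css") gives a one-year lifetime, while a forum thread URL gets the short one.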

You can also shave some time off your speed scores by pre-connecting to required origins.

How does a CDN work?

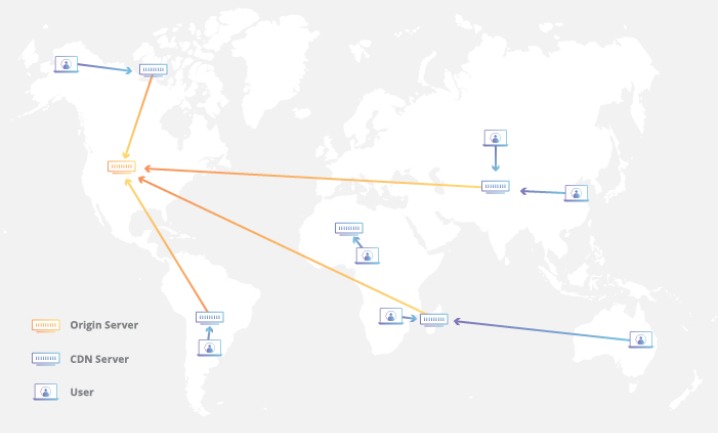

A CDN is a network of proxy servers, usually in multiple locations, that cache website content. The goal of CDNs is to deliver content efficiently, and they act as a layer between the user and the origin server. This prevents all requests from going to the same server. The CDN’s proxy servers distribute requests geographically, so each one is handled close to the end user.

A content delivery network (CDN) is a distributed network of servers located in different geographic locations around the world. The purpose of a CDN is to improve the performance, reliability, and scalability of delivering web content to users.

Here is how a CDN works:

- A user requests web content, such as a web page, image, or video, from a website.

- The request is routed to the closest server in the CDN network, based on the user’s geographic location.

- The CDN server checks whether it already holds a cached copy of the requested content.

- If the requested content is not present in the CDN cache, the CDN server retrieves the content from the origin server, which is the server that hosts the website, and caches it for later requests.

- The CDN server delivers the requested content to the user, using the fastest and most reliable route available.
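The flow above can be sketched as a toy model. The class names, region labels, and edge names are all hypothetical, and the region lookup table is a simplification: real CDNs typically route with DNS or anycast rather than a dictionary.

```python
from typing import Callable, Dict, List, Tuple


class EdgeServer:
    """One CDN location with its own cache."""

    def __init__(self, name: str, fetch_from_origin: Callable[[str], bytes]) -> None:
        self.name = name
        self._fetch_from_origin = fetch_from_origin
        self._cache: Dict[str, bytes] = {}

    def serve(self, url: str) -> Tuple[bytes, str]:
        if url in self._cache:
            return self._cache[url], "HIT"
        body = self._fetch_from_origin(url)  # only happens on a miss
        self._cache[url] = body
        return body, "MISS"


class CdnSketch:
    def __init__(self, edges: Dict[str, EdgeServer]) -> None:
        self._edges = edges  # user region -> closest edge (assumed known)

    def request(self, user_region: str, url: str) -> Tuple[str, str, bytes]:
        edge = self._edges[user_region]
        body, status = edge.serve(url)
        return edge.name, status, body


origin_hits: List[str] = []


def origin(url: str) -> bytes:
    origin_hits.append(url)  # record every trip back to the origin
    return f"<html>{url}</html>".encode()


cdn = CdnSketch({"us-east": EdgeServer("atlanta", origin),
                 "asia": EdgeServer("singapore", origin)})
```

Each edge fills its own cache, so the first visitor in every region pays for one trip to the origin and later visitors in that region do not.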

By caching web content in multiple locations around the world, a CDN can reduce the distance that web content must travel to reach users. This reduces the latency and improves the speed of content delivery, resulting in faster and more reliable web browsing experiences for users.

In addition to caching content, a CDN can also provide other services such as load balancing, security, and analytics. Load balancing distributes incoming web traffic across multiple servers to ensure that no single server is overloaded. Security features such as DDoS protection and SSL/TLS encryption help to protect websites from attacks and ensure secure communication with users. Analytics tools provide insights into website performance and user behavior, which can help website owners optimize their content delivery strategies.

Overall, a CDN is an essential tool for improving the performance and reliability of delivering web content, especially for websites that serve a global audience or experience high traffic volumes.

There are many CDN services. The most popular are Cloudflare, Akamai, and MaxCDN (now StackPath).

What is caching on the edge with Cloudflare?

Cloudflare adds an additional layer of caching. You have the caching that happens in a user’s browser, and then you have the CDN cache.

For example: A user has been to your site before, but the caching rule in their browser says, “This page has been updated.” This means the request will have to go to the origin server. If the user is in New York, and the origin server is in Singapore, that’s a long call. However, a CDN knows to retrieve the content from your site every time it’s updated. The updated site is now cached at the closest CDN server, in Atlanta. This reduces load time significantly for subsequent visits.
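To get a feel for how much distance matters in this example, here is a back-of-the-envelope estimate. It is only a sketch: the city coordinates are approximate, the signal speed of about 200,000 km/s in fiber is a rough assumption, and real routes are longer than the straight-line distance, so treat the results as lower bounds.

```python
import math
from typing import Tuple

EARTH_RADIUS_KM = 6371.0
FIBER_SPEED_KM_PER_S = 200_000.0  # assumption: roughly two-thirds of light speed

# Approximate (latitude, longitude) in degrees.
NEW_YORK = (40.7128, -74.0060)
ATLANTA = (33.7490, -84.3880)
SINGAPORE = (1.3521, 103.8198)


def great_circle_km(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Haversine distance between two points on the Earth's surface."""
    lat1, lon1 = math.radians(a[0]), math.radians(a[1])
    lat2, lon2 = math.radians(b[0]), math.radians(b[1])
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def min_round_trip_ms(distance_km: float) -> float:
    """Best-case time for a signal to travel there and back."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


to_origin = great_circle_km(NEW_YORK, SINGAPORE)
to_edge = great_circle_km(NEW_YORK, ATLANTA)
```

Under these assumptions the trip to Singapore is more than ten times longer than the trip to Atlanta, and every round trip a page load needs pays that difference again.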

Cloudflare has dedicated data centers, rented from ISPs, in more locations across the globe than a typical CDN has. This gives you the extra layer of caching called caching on the edge.

Caching on the edge with Cloudflare is a method of caching web content on Cloudflare’s global network of servers, which are located in different geographic locations around the world. This caching method is also known as “edge caching.”

When a user requests web content from a website that is using Cloudflare’s services, the request is first routed to the closest Cloudflare server based on the user’s geographic location. The Cloudflare server then checks if the requested content is available in its cache. If the content is available, the server delivers it directly to the user, without having to go back to the origin server. This reduces the time it takes for the content to load and improves the overall performance of the website.
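You can see which path a response took by looking at its headers. Cloudflare reports the outcome in a CF-Cache-Status response header, with values such as HIT and MISS. The helper below is a hypothetical sketch that only interprets a headers dictionary you have already collected; it handles a few of the possible values and does not make any request itself.

```python
from typing import Mapping


def describe_cloudflare_cache(headers: Mapping[str, str]) -> str:
    """Summarize where a response came from, based on CF-Cache-Status."""
    # HTTP header names are case-insensitive, so normalize before looking up.
    normalized = {name.lower(): value for name, value in headers.items()}
    status = normalized.get("cf-cache-status", "").upper()
    if status == "HIT":
        return "served from the edge cache"
    if status in {"MISS", "EXPIRED"}:
        return "fetched from the origin"
    if not status:
        return "no CF-Cache-Status header present"
    return f"other status: {status}"
```

For example, a response carrying CF-Cache-Status: HIT was delivered directly from the closest Cloudflare server without going back to the origin.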

Cloudflare’s edge caching also includes a feature called “dynamic caching,” which allows Cloudflare to cache content that is typically considered dynamic and is not normally cached by other caching systems. This feature is possible due to Cloudflare’s ability to execute server-side code at the edge of the network.

Cloudflare’s caching on the edge also provides other benefits, such as:

- Improved reliability and uptime: By caching content on its global network, Cloudflare can provide backup servers that can take over if the origin server goes down.

- Reduced server load: Caching on the edge reduces the number of requests that need to be served by the origin server, which can help to reduce server load and improve server performance.

- Enhanced security: Cloudflare’s caching on the edge includes features such as SSL/TLS encryption and DDoS protection, which help to improve the security and protection of the website.

Overall, caching on the edge with Cloudflare is an effective method of improving website performance, reliability, and security, especially for websites that serve a global audience or experience high traffic volumes.

Wrapping up the difference between CDNs and caching

The biggest differences between content delivery networks (CDNs) and caching are their scope and implementation.

- Scope: CDNs are a network of servers located in different geographic locations around the world, while caching is a method of storing web content on a user’s local device or on a server.

- Implementation: CDNs require a separate infrastructure and configuration, while caching can be implemented within a web application or server using caching rules and directives.

Here are some more specific differences:

- Geographic coverage: CDNs are designed to deliver web content to users across the world, while caching is typically used to improve performance for individual users or within a local network.

- Network architecture: CDNs use a distributed network of servers to cache and deliver content, while caching can be implemented using various types of storage such as local disk, memory, or a server-side cache.

- Performance benefits: CDNs can provide faster and more reliable content delivery by caching content in multiple locations, while caching can improve performance by reducing the number of requests to the origin server and delivering content faster from a local cache.

- Cost: CDNs can be more expensive to implement and maintain due to the need for a separate infrastructure and ongoing costs for network maintenance, while caching can be implemented using existing infrastructure and server resources.

In summary, CDNs and caching are both methods of improving the performance and scalability of delivering web content, but they differ in scope and implementation. CDNs are designed for global content delivery, while caching is typically used to improve performance for individual users or within a local network.

It’s important to remember that while there is a difference between CDNs and caching, they share the purpose of making user experience on the web faster and more seamless.

Caching occurs when you use a CDN service. However, since CDNs are reverse proxies that sit as a layer between the user and the origin server, cached content is delivered faster than it is by speed optimization plugins.

Plugins will always be a little bit slower due to the nature of how they’re built. The code that makes up these plugins is typically created by a third party. This code has to occasionally make external calls to wherever the plugin’s files are hosted. Caching at the CDN level (server level), by contrast, sits closer to the origin server and has to make fewer requests. This speeds up a user’s experience and improves metrics like time to first byte (TTFB).

Ezoic is a Cloudflare partner. Our Site Speed Accelerator eliminates the need for a suite of speed plugins that bog down your site’s speed, while also delivering server-level caching speeds.

Do you have any questions on CDNs and caching? Let me know in the comments and I’ll answer them.